Open-set Object Detection on Instagram Images

After the image collection is finalized, the next action is the identification of objects in the gathered images. The focus of this analysis is on fashion-related items; therefore, it is essential to mitigate the effects of background elements and items unrelated to fashion.

This session will focus solely on object detection, reserving the task of filtering out non-fashion-related objects for the next phase.

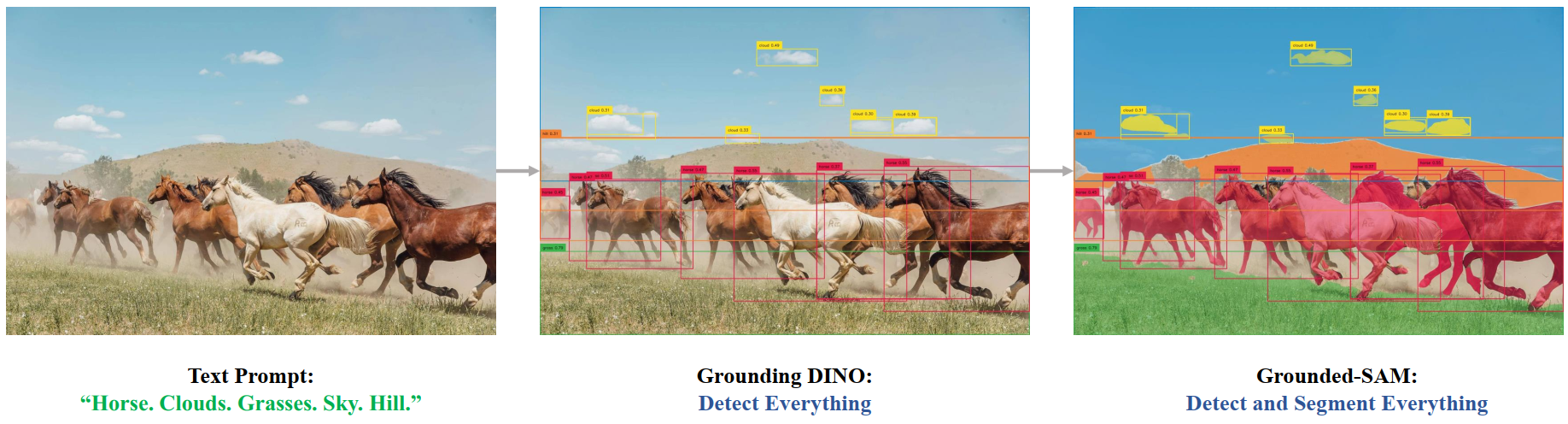

Setup Grounded-Segment-Anything

Thanks to the contributions of the open-source community, a variety of open-set object detection models are now freely available. For this particular use case, I primarily utilize the pipelines from Grounded-Segment-Anything, incorporating several customized modifications to align with specific project needs.

Image source: Grounded-Segment-Anything

To start, it’s necessary to configure the required environment and dependencies following the guidelines provided in Grounded-Segment-Anything.

For this specific use case, setting up RAM (Recognize Anything Model) and GroundingDINO is sufficient. To create a local GPU environment for GroundingDINO without using Docker, manual configuration of the environment variable is required. Detailed guidance can be found in this GitHub issue: issue-360.

The pretrained models can be accessed via the links below. These models should be downloaded to a local directory for subsequent use.

| Model | Pretrained Model Link |

|---|---|

| RAM (Recognize Anything Model) | ram_swin_large_14m |

| GroundingDINO | groundingdino_swint_ogc |

Pipeline Overview

The major steps are:

- Load and process the image for

RAMandGroundingDINOmodels. - Get predicted tags using

RAM. - Obtain bounding boxes and phrases from

GroundingDINObased on the predictedtagsfrom the previous step. - Process bounding boxes and phrases for the final output.

The simplified version of the inference process is presented as below:

def inference(self, image_path, res_path, debug=False):

# Load and process image

image_pil, image_gdino, image_ram = self.process_image(image_path)

# Recognize Anything Model

tags = self.get_auto_tags(image_ram)

# GroundingDINO

bboxes, scores, phrases = self.get_grounding_output(image_gdino, tags)

bboxes_processed, phrases_processed = self.process_bboxes(

image_pil, bboxes, scores, phrases

)

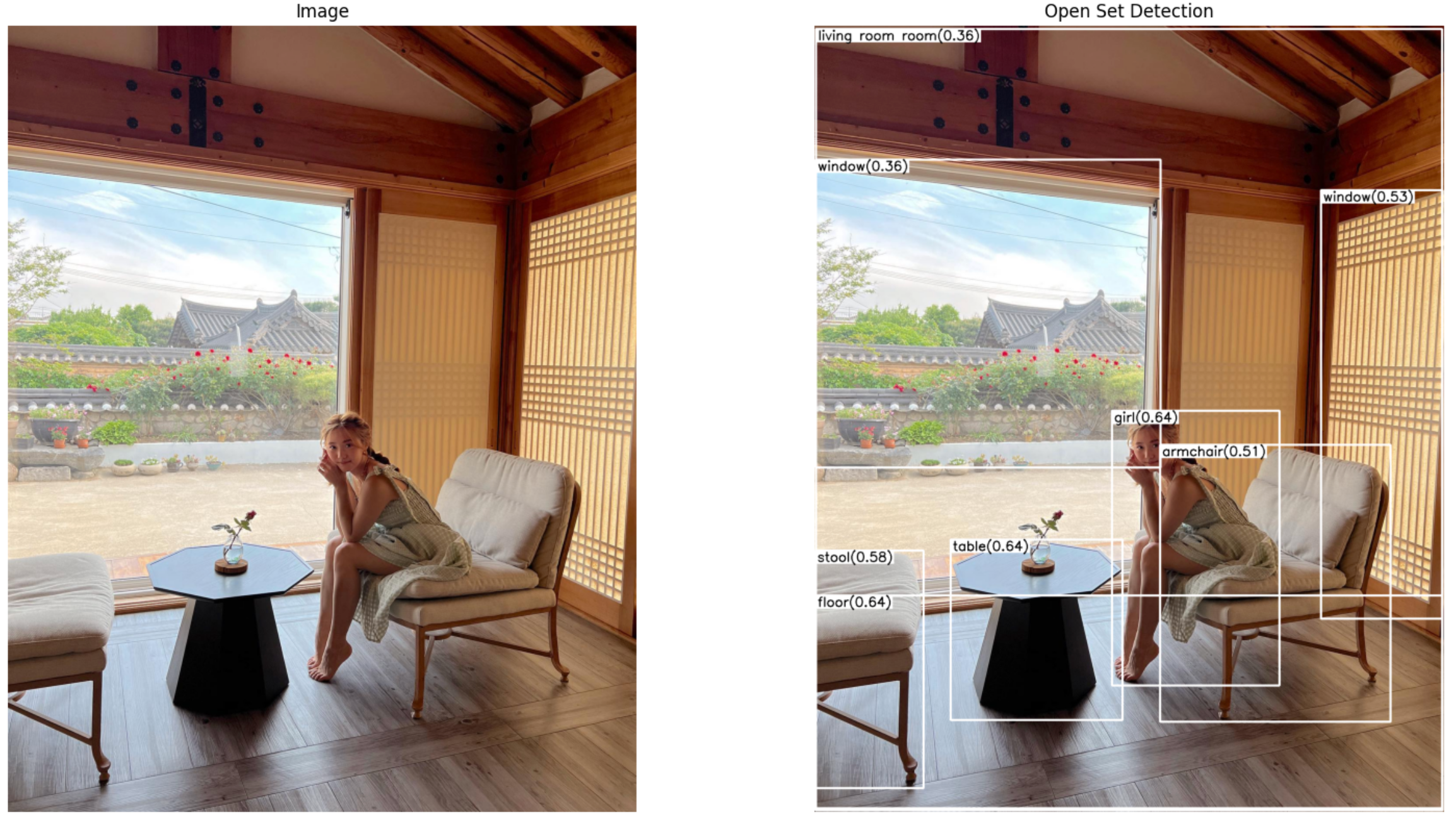

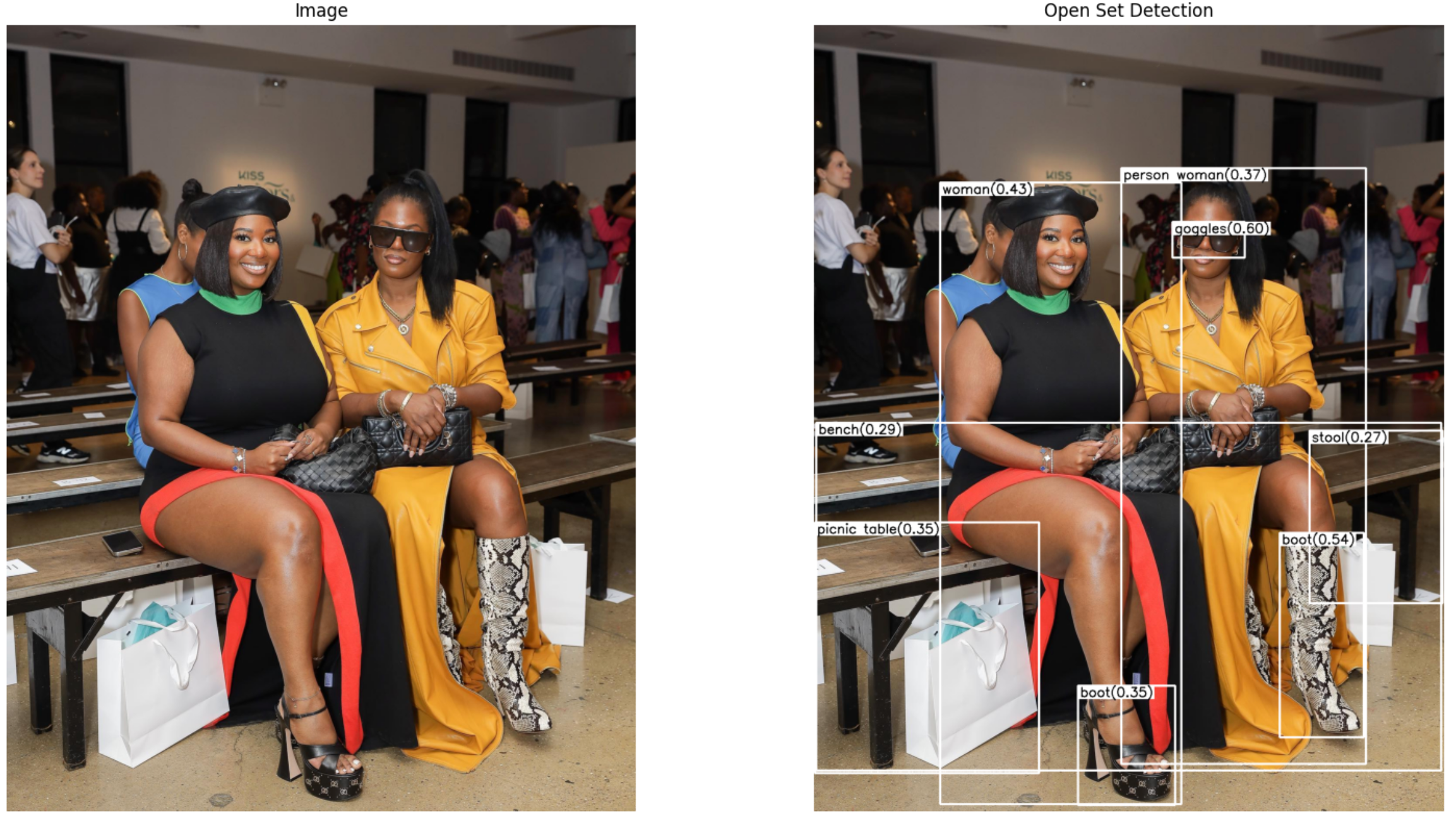

Results

The following are sample outcomes produced by the pipeline. The final results comprise a list of bounding boxes and their corresponding tags, depicted on the right side of each image. Additionally, the initial tags predicted by RAM are displayed at the bottom of each example for reference.

RAM Tags: armchair, chair, couch, table, floor, girl, hassock, living room, relax, room, sit, stool, window, woman

RAM Tags: bush, dress, flower, garden, goggles, jumpsuit, pink, rose, sleepwear, stand, sunglasses, walk, wear, woman

RAM Tags: bench, boot, dress, event, goggles, person, picnic table, sit, stool, woman

The advantage of using an open-set detection model is its ability to identify a wide range of objects without predefined taxonomies. However, this approach also presents a significant challenge. Since the focus is on fashion-related objects, there’s a need to selectively filter out the relevant objects. Giving the output tags are in free-form text, the filtering process is much more challenging.

The final result shows that, out of the 30,179 images processed, objects were detected in 29,937 of them, resulting in a total of 182,123 identified objects. The presence of 4,561 unique tags makes it impractical to manually filter out fashion-related objects. Therefore, it is essential to develop a strategy that groups similar tags into broader categories, facilitating more efficient processing and analysis.

| Most Frequent Tag | Count | Least Frequent Tag | Count |

|---|---|---|---|

| woman | 13874 | control mp3 player | 1 |

| dress | 6688 | oval pendant | 1 |

| sandal | 5060 | convenience store | 1 |

| girl | 3664 | outlet | 1 |

| goggles | 3454 | outhouse | 1 |

| man | 3428 | origami | 1 |

| hand | 3402 | orchid plant | 1 |

| shoe | 2625 | convenience store storefront | 1 |

| person | 2562 | conversation couple | 1 |

| stool | 2561 | zoo | 1 |

Implementation Details

Input

| Name | Description |

|---|---|

ins_posts/<username>/images | Images of Instagram posts |

Process

| Code | Description |

|---|---|

codes/open-set_detection/inference.ipynb | Open-set object detection inference |

Output

| Name | Description |

|---|---|

ins_posts/<username>/bboxes | New bboxes folder under the same root directory as the images folder, containing the detection results |

Folder Structure:

ins_posts_3

├── AylaDimitri

│ ├── AylaDimitri_posts.json

│ ├── AylaDimitri_profile.json

│ ├── bboxes

│ │ ├── 008a4a567a8c4a5f5f0cb06ec0dc92e8.json

│ │ ├── ...

│ │ └── fcff621d25e77ac24889686453e1befe.json

│ └── images

│ ├── 008a4a567a8c4a5f5f0cb06ec0dc92e8.jpg

│ ├── ...

│ └── fcff621d25e77ac24889686453e1befe.jpg

└── xeniaadonts

├── bboxes

│ ├── 07d8c562f6d1ee6f1a2bdb1453e912d7.json

│ ├── ...

│ └── fbeab7c9d911db651ad4bc1d3bc25062.json

├── images

│ ├── 07d8c562f6d1ee6f1a2bdb1453e912d7.jpg

│ ├── ...

│ └── fbeab7c9d911db651ad4bc1d3bc25062.jpg

├── xeniaadonts_posts.json

└── xeniaadonts_profile.json

Result Sample:

{

"bboxes": [

[

819.04150390625,

450.6321105957031,

984.864013671875,

532.0742797851562

],

[

1132.3551025390625,

928.917236328125,

1436.1605224609375,

1323.56005859375

]

],

"tags": [

"goggles(0.60)",

"stool(0.27)"

]

}