Clustering on Selected Objects

Handling a Large Embedding Matrix

As discussed in the object embedding session, the feature embedding dimension is set at 1,792. Clustering 61,751 objects therefore requires an embedding matrix of 61,751 x 1,792, roughly 110.7 million values (about 443 MB if stored as float32). Holding the full matrix in memory alongside the working copies made during clustering is a significant challenge.

NumPy offers an effective way to address this. numpy.memmap creates a memory-mapped array backed by a binary file on disk. Opening it in "w+" mode creates the file at its full shape, after which each individual embedding file can be loaded and written into the next block of rows.

This approach allows for efficient handling of large data arrays by breaking down the loading and processing into more manageable segments, effectively circumventing the limitations of in-memory operations.
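The loading step described above might look like the following minimal sketch. It assumes each embedding file is a `.npy` array of float32 rows; the function name `build_memmap`, the file names, and the dtype are hypothetical choices for illustration, not details taken from the original notebook.

```python
import os
import tempfile

import numpy as np

EMB_DIM = 1792  # embedding dimension stated in the text


def build_memmap(embedding_files, n_rows, out_path, dim=EMB_DIM):
    """Copy per-file embedding arrays into one disk-backed matrix."""
    # "w+" creates (or overwrites) the binary file with a fixed shape.
    mm = np.memmap(out_path, dtype=np.float32, mode="w+", shape=(n_rows, dim))
    offset = 0
    for path in embedding_files:
        # Assumption: each file holds one or more rows of length `dim`.
        emb = np.load(path).astype(np.float32).reshape(-1, dim)
        mm[offset:offset + len(emb)] = emb  # write into the next free rows
        offset += len(emb)
    mm.flush()  # push pending writes to disk
    return offset


# Tiny demo with synthetic embeddings (hypothetical file names).
tmp_dir = tempfile.mkdtemp()
rng = np.random.default_rng(0)
files = []
for i, n in enumerate([3, 5]):
    p = os.path.join(tmp_dir, f"emb_{i}.npy")
    np.save(p, rng.normal(size=(n, EMB_DIM)).astype(np.float32))
    files.append(p)

out_path = os.path.join(tmp_dir, "embeddings.dat")
rows_written = build_memmap(files, n_rows=8, out_path=out_path)
```

Because a memory-mapped array has a fixed shape, the total row count has to be known before the file is created; only one small embedding file is held in memory at any time.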

Efficient Clustering with MiniBatchKMeans

As noted in the text clustering session, Hierarchical Agglomerative Clustering (HAC) is intuitive to use because it accepts a distance_threshold instead of requiring the number of clusters up front.

However, HAC scales poorly. With 61,751 objects to cluster, a more efficient and scalable algorithm is needed.

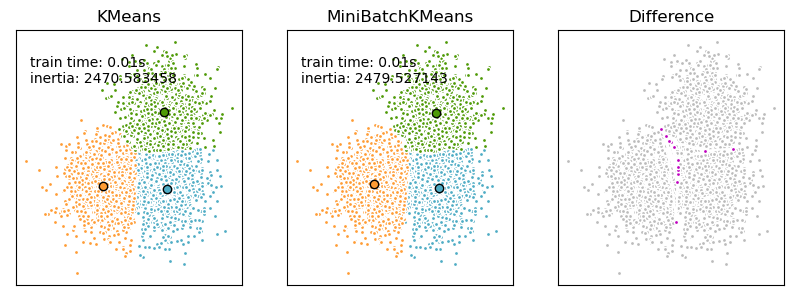

Image source: MiniBatchKMeans: Mini-Batch K-Means clustering.

A suitable solution is the MiniBatchKMeans algorithm, an adaptation of the KMeans algorithm designed to enhance computational efficiency. MiniBatchKMeans employs mini-batches to reduce computation time while still aiming to optimize the same objective function as KMeans.

The key advantage of MiniBatchKMeans lies in its ability to perform partial_fit on mini-batch data. This capability aligns perfectly with the use of pre-stored memory mapping files, allowing for batch processing of the clustering task.

By using the "r" mode for memory mapping, batches of embeddings can be sequentially loaded from the memory-mapped file, with partial_fit applied to each batch.

Once the entire embedding matrix has been processed, the matrix can be reloaded in batches to perform the predict function. This step assigns each object to its most appropriate cluster based on the learned clustering model.
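The two passes described above can be sketched as follows. This is a minimal illustration under stated assumptions: the float32 dtype, the function name `fit_predict_in_batches`, and the demo sizes are hypothetical, and the synthetic data only stands in for the real embeddings.

```python
import os
import tempfile

import numpy as np
from sklearn.cluster import MiniBatchKMeans


def fit_predict_in_batches(path, n_rows, dim, n_clusters, batch_size, seed=0):
    # "r" opens the existing file read-only; rows are read only when sliced.
    X = np.memmap(path, dtype=np.float32, mode="r", shape=(n_rows, dim))
    model = MiniBatchKMeans(n_clusters=n_clusters, random_state=seed)

    # Pass 1: update the centroids one batch at a time.
    for start in range(0, n_rows, batch_size):
        batch = np.array(X[start:start + batch_size])  # copy this slice into RAM
        model.partial_fit(batch)

    # Pass 2: assign every row to its nearest learned centroid.
    labels = np.empty(n_rows, dtype=np.int64)
    for start in range(0, n_rows, batch_size):
        batch = np.array(X[start:start + batch_size])
        labels[start:start + batch_size] = model.predict(batch)
    return model, labels


# Demo on a small synthetic memory-mapped matrix.
n_rows, dim = 200, 16
path = os.path.join(tempfile.mkdtemp(), "demo_embeddings.dat")
rng = np.random.default_rng(0)
demo = np.memmap(path, dtype=np.float32, mode="w+", shape=(n_rows, dim))
demo[:] = rng.normal(size=(n_rows, dim)).astype(np.float32)
demo.flush()
del demo

model, labels = fit_predict_in_batches(
    path, n_rows, dim, n_clusters=4, batch_size=50
)
```

The first batch should contain at least n_clusters rows, since the centroids are initialised from it; with 600 clusters that implies a batch size of 600 or more.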

Clustering Results

The number of clusters (n_clusters) is set to 600, aiming for roughly 100 samples per cluster (61,751 / 600 ≈ 103).

The 10 largest clusters and the 10 smallest clusters by sample count are listed below:

| Top Clusters | Num Samples | Tail Clusters | Num Samples |
|---|---|---|---|
| 152 | 512 | 222 | 1 |
| 61 | 493 | 192 | 1 |
| 202 | 440 | 328 | 1 |
| 233 | 422 | 551 | 1 |
| 24 | 420 | 596 | 1 |
| 48 | 355 | 284 | 1 |
| 186 | 347 | 584 | 1 |
| 157 | 341 | 540 | 1 |
| 132 | 339 | 429 | 1 |
| 47 | 338 | 513 | 1 |
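A table like the one above can be derived directly from the predicted labels. The sketch below is one way to do it with NumPy; the helper name `cluster_size_summary` and the toy labels are hypothetical.

```python
import numpy as np


def cluster_size_summary(labels, n_clusters, k=10):
    """Return the k largest and k smallest clusters as (cluster, count) pairs."""
    # minlength keeps clusters that received no samples in the count vector.
    counts = np.bincount(labels, minlength=n_clusters)
    order = np.argsort(-counts, kind="stable")  # largest first
    top = [(int(c), int(counts[c])) for c in order[:k]]
    tail = [(int(c), int(counts[c])) for c in order[::-1][:k]]
    return top, tail


# Toy example: 5 clusters, one of which (cluster 4) is empty.
toy_labels = np.array([0, 0, 0, 1, 1, 2, 3, 3, 3, 3])
top, tail = cluster_size_summary(toy_labels, n_clusters=5, k=2)
```

Note that this version also reports empty clusters in the tail, which a plain count over observed labels would omit.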

Although MiniBatchKMeans is efficient, these clustering results are provisional. The value of n_clusters is only an approximation, and because k-means assigns every sample to a cluster, outliers end up inside clusters and pull on the centroids. The raw results therefore need careful interpretation.

Post-processing

Post-processing refines the clustering results in several steps, using the Euclidean distance and cosine similarity computed between each sample and its cluster center.
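The two measurements can be computed per sample as in the following sketch. The function name `center_metrics` and the toy vectors are hypothetical; in practice `centers` would be the fitted model's cluster centers and `labels` the predicted assignments.

```python
import numpy as np


def center_metrics(X, centers, labels):
    """Euclidean distance and cosine similarity of each row to its own center."""
    assigned = centers[labels]  # (n, d): the center each sample belongs to
    dist = np.linalg.norm(X - assigned, axis=1)
    dot = np.sum(X * assigned, axis=1)
    norms = np.linalg.norm(X, axis=1) * np.linalg.norm(assigned, axis=1)
    sim = dot / np.maximum(norms, 1e-12)  # guard against zero-length vectors
    return dist, sim


# Toy example with two 2-D centers.
X = np.array([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])
centers = np.array([[1.0, 0.0], [0.0, 1.0]])
labels = np.array([0, 1, 0])
dist, sim = center_metrics(X, centers, labels)
```

The second sample shows why both measures are useful: it is a full unit away from its center in Euclidean terms, yet points in exactly the same direction (cosine similarity 1).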

- Removing Duplicate Images within Clusters: To avoid redundancy (like having both left and right shoes in the same cluster), duplicate image names are removed from each cluster. This action reduces the overall sample count to 54,310.

- Filtering Based on Cosine Similarity: Samples with a cosine similarity greater than 0.7 are retained. This filtering narrows down the clusters to 585, containing 20,815 objects in total.

- Retaining Clusters with Sufficient Samples: Clusters with at least 10 samples are kept (the smallest retained clusters contain exactly 10). This final step results in 339 clusters with a collective count of 20,030 objects.

These steps ensure the clustering results are more coherent and relevant for further analysis and applications.
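The three filters above can be sketched with pandas as follows. The column names follow the data sample shown under Implementation Details; the function name `postprocess`, the choice to keep the most similar row among duplicates, and the inclusive size cutoff are assumptions made for illustration.

```python
import pandas as pd


def postprocess(df, sim_threshold=0.7, min_cluster_size=10):
    # 1. One row per image within each cluster.
    #    Assumption: of the duplicates, keep the one closest to the center.
    out = df.sort_values("sim", ascending=False).drop_duplicates(
        subset=["cluster", "image_name"], keep="first"
    )
    # 2. Keep only samples that are similar enough to their cluster center.
    out = out[out["sim"] > sim_threshold]
    # 3. Keep only clusters that still have enough samples.
    sizes = out["cluster"].map(out["cluster"].value_counts())
    out = out[sizes >= min_cluster_size]
    return out.sort_index()


# Toy example with a small size cutoff so the effect is visible.
toy = pd.DataFrame(
    {
        "image_name": ["a", "a", "b", "c", "d", "e"],
        "cluster": [0, 0, 0, 0, 1, 1],
        "sim": [0.9, 0.8, 0.75, 0.6, 0.95, 0.5],
    }
)
kept = postprocess(toy, sim_threshold=0.7, min_cluster_size=2)
```

In the toy data, cluster 1 is dropped entirely: only one of its samples passes the similarity filter, which leaves it below the minimum size.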

The top and tail 10 clusters after post-processing are listed below:

| Top Clusters | Num Samples | Tail Clusters | Num Samples |
|---|---|---|---|
| 152 | 497 | 469 | 11 |
| 61 | 460 | 221 | 11 |
| 575 | 278 | 134 | 11 |
| 556 | 267 | 495 | 11 |
| 157 | 244 | 421 | 10 |
| 57 | 240 | 68 | 10 |
| 430 | 239 | 33 | 10 |
| 48 | 234 | 218 | 10 |
| 132 | 231 | 102 | 10 |
| 347 | 216 | 15 | 10 |

The shifts in the top clusters after post-processing are informative. They show that the post-processing steps retain compact clusters: groups of objects that closely resemble each other and that contain enough samples to be useful in later review.

| Original Top Clusters | Original Num Samples | After Post-processing | New Top Clusters | Original Num Samples | After Post-processing |
|---|---|---|---|---|---|
| 152 | 512 | 497 | 152 | 512 | 497 |
| 61 | 493 | 460 | 61 | 493 | 460 |
| 202 | 440 | 0 | 575 | 328 | 278 |
| 233 | 422 | 146 | 556 | 288 | 267 |
| 24 | 420 | 135 | 157 | 341 | 244 |
| 48 | 355 | 234 | 57 | 258 | 240 |
| 186 | 347 | 158 | 430 | 276 | 239 |
| 157 | 341 | 244 | 48 | 355 | 234 |
| 132 | 339 | 231 | 132 | 339 | 231 |
| 424 | 338 | 94 | 347 | 282 | 216 |

Analyzing specific clusters can provide valuable insights into the types of objects that are excluded during post-processing.

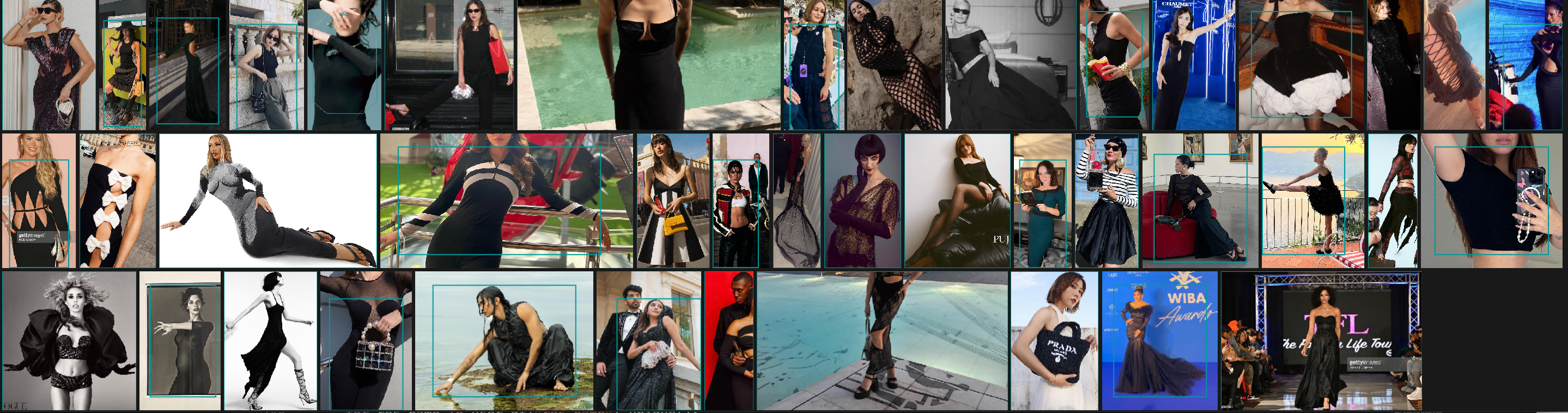

Take cluster 202, for instance, which is entirely eliminated after post-processing. This suggests that the cluster may have been noisy, containing a diverse or unrelated set of objects that did not cohere well as a group.

Samples from Cluster 202.

Reviewing the top and tail samples by cosine similarity provides a clear picture of the cluster’s range and helps to validate the effectiveness of the post-processing steps in refining the overall clustering results.

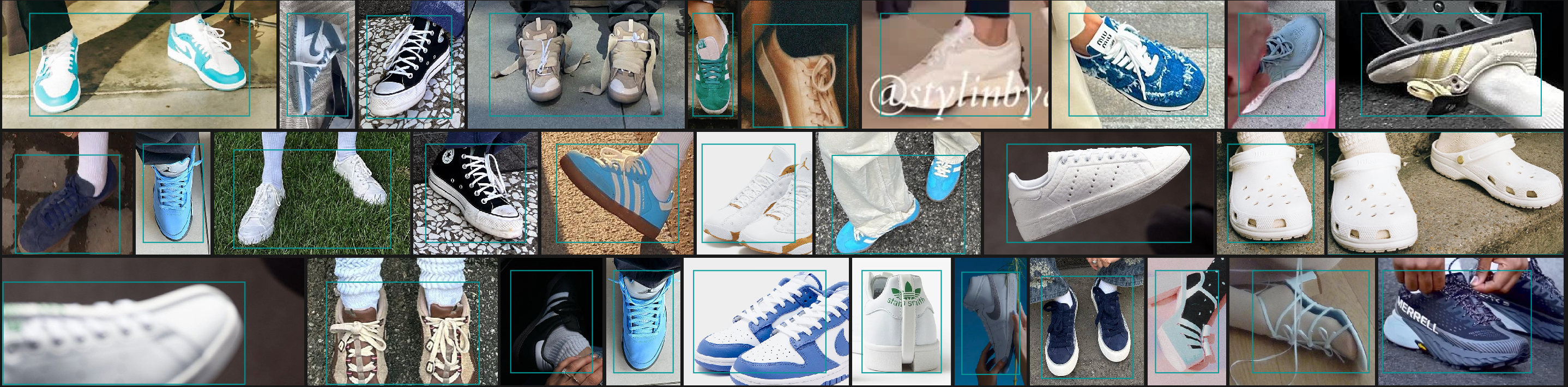

Cluster 24

Top Samples from Cluster 24.

Tail Samples from Cluster 24.

Cluster 233

Top Samples from Cluster 233.

Tail Samples from Cluster 233.

Potential Improvements

Several MiniBatchKMeans parameters could be adjusted to improve the clustering results, for instance the batch size, the number of iterations, or the number of clusters.

Additionally, the order in which embeddings are written to the memory-mapped file can influence the clustering process. Here, embeddings are ordered by username and then by image name. As a result, the mini-batches used during clustering are not random: each batch is dominated by a few users' images, which could bias the centroid updates and reduce clustering quality.
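One possible way to randomise the batches without rewriting the file is to shuffle row indices rather than rows. The sketch below is a suggestion under stated assumptions, not something the original notebook is known to do; the generator name `shuffled_batches` is hypothetical.

```python
import numpy as np


def shuffled_batches(n_rows, batch_size, seed=0):
    """Yield index arrays that together cover every row once, in random batches."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_rows)
    for start in range(0, n_rows, batch_size):
        # Sorting within a batch keeps disk reads in ascending order
        # without changing which rows the batch contains.
        yield np.sort(perm[start:start + batch_size])


batches = list(shuffled_batches(10, 4, seed=0))

# Hypothetical usage with a memory-mapped matrix X and a MiniBatchKMeans model:
#     for idx in shuffled_batches(X.shape[0], batch_size):
#         model.partial_fit(np.array(X[idx]))
```

Indexing the memory-mapped array with an index array copies only the selected rows into memory, so the memory footprint stays at one batch.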

Nevertheless, given that the assessment of clustering results often relies on subjective judgement, extensive tuning of the clustering algorithm may not be the most efficient use of resources.

The ultimate goal is to ensure that clusters are compact, meaning that they consist of highly similar objects. This ensures that later manual review, where interesting clusters are selected and potentially combined to form groups for display, is based on solid, coherent clusters.

Therefore, as long as the clusters exhibit a high degree of similarity among their objects, the process can proceed to the manual review and selection phase for final group-level display.

Implementation Details

Input

| Name | Description |
|---|---|
| ins_posts_object_tags_cleaned.csv | CSV file containing objects with both raw and cleaned tags |
| ins_posts/<username>/embeddings | Visual embeddings of full images and objects |

Process

| Code | Description |
|---|---|
| codes/cluster_analysis/clustering.ipynb | Clusters the selected objects and picks the clusters of interest |

Output

| Name | Description |
|---|---|
| ins_posts_clusters.csv | CSV file containing selected objects and their clusters |

Data Sample:

{
  "batch_folder": "ins_posts_2",
  "username": "amandashadforth",
  "res_name": "c5d37b4a4b21a1593b1086976d9d92cb.json",
  "bbox": "[235.21124267578125, 166.27415466308594, 388.292724609375, 257.6859130859375]",
  "tag": "forehead",
  "score": 0.6,
  "idx": 3,
  "image_name": "c5d37b4a4b21a1593b1086976d9d92cb",
  "cluster": 29,
  "dist": 0.5734329223632812,
  "sim": 0.8205487132072449
}
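In the sample above, bbox is stored as a string rather than a list, so it needs to be parsed before use. A minimal sketch, assuming the string is always a JSON-style list of four numbers (the helper name `parse_bbox` is hypothetical, and the meaning of the four coordinates is not stated in this section):

```python
import json

record = {
    "image_name": "c5d37b4a4b21a1593b1086976d9d92cb",
    "bbox": "[235.21124267578125, 166.27415466308594, "
            "388.292724609375, 257.6859130859375]",
    "cluster": 29,
    "dist": 0.5734329223632812,
    "sim": 0.8205487132072449,
}


def parse_bbox(bbox_str):
    """Turn the stringified bounding box into a list of floats."""
    return [float(v) for v in json.loads(bbox_str)]


bbox = parse_bbox(record["bbox"])
```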