CatFood

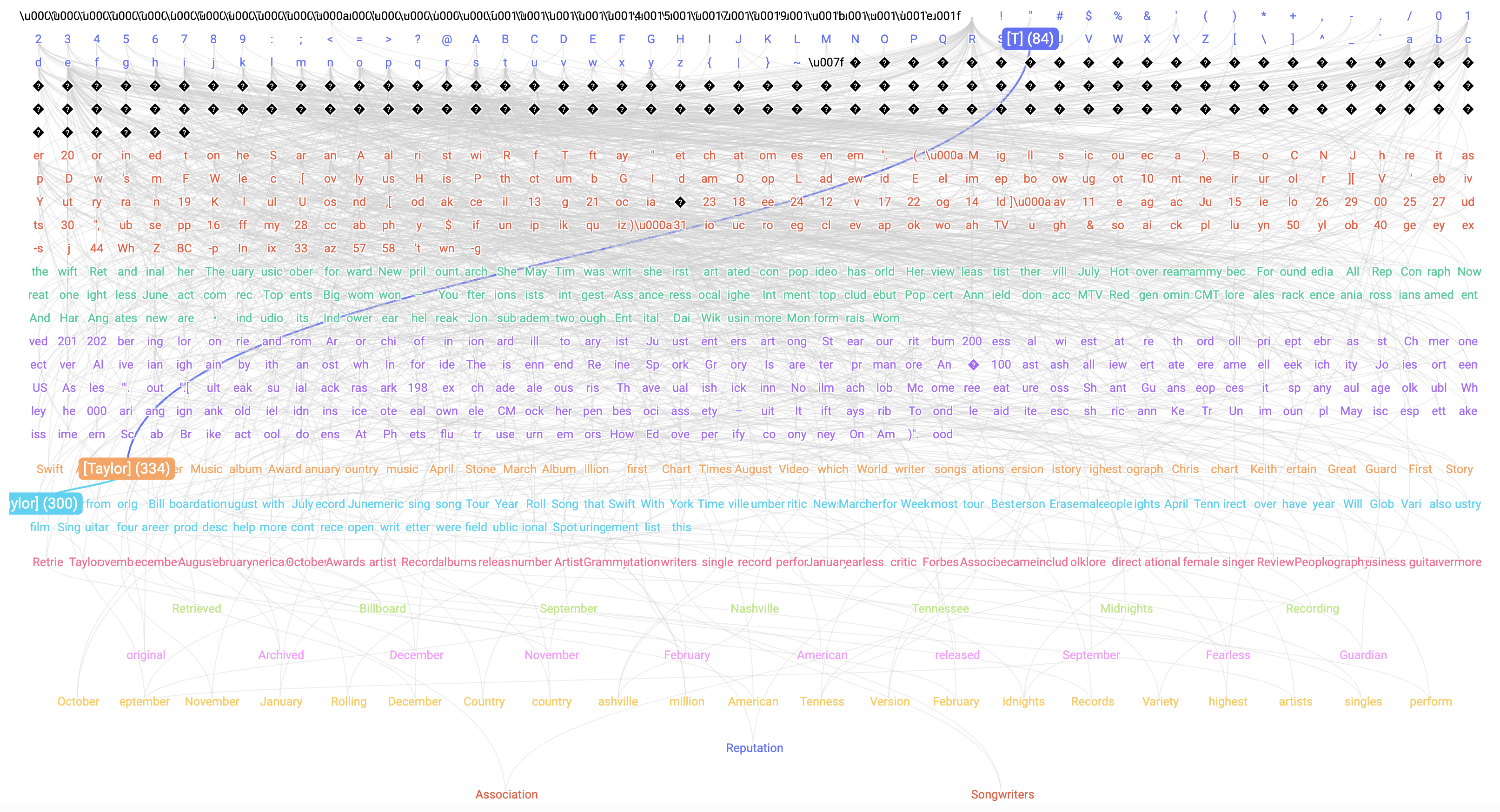

Knowledge Nuggets for minbpe

This post collects the knowledge nuggets I gathered while learning minbpe. A big thanks to Andrej Karpathy for his super clean and elegant code; sometimes a single line of code is worth a thousand words. I also got a lot of help from ChatGPT and Claude in figuring things out, and they deserve credit for their invaluable assistance....

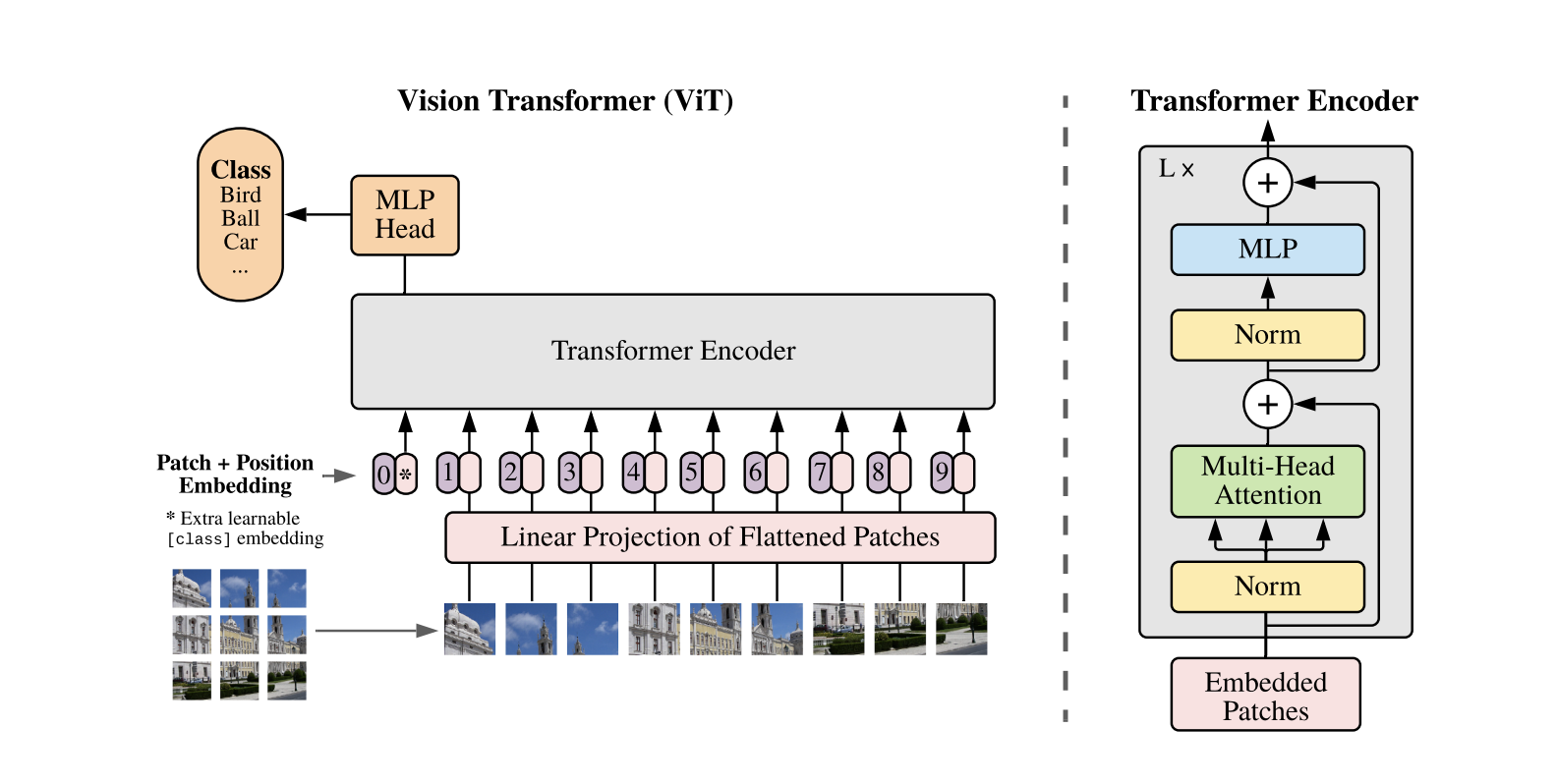

The Annotated Vision Transformer

1. Overview: The standard Transformer is applied directly to images, with the fewest possible modifications. 1.1. Image to Patch Embeddings: The standard Transformer takes a 1D sequence of token embeddings as input. To handle 2D images, an image is split into $N$ flattened patches ($x_p^1; x_p^2; \ldots; x_p^N$), each with shape $P^2 \cdot C$, wher...
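The patch-splitting step can be sketched in NumPy as follows. This is a minimal illustration, not the post's code. It assumes a channels-last `(H, W, C)` array whose height and width are divisible by the patch size $P$, so that $N = HW / P^2$. The function name `image_to_patches` is hypothetical.

```python
import numpy as np

def image_to_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into N flattened patches of length P*P*C.

    Assumes H and W are divisible by patch_size, so N = H*W / P**2.
    """
    h, w, c = image.shape
    p = patch_size
    if h % p or w % p:
        raise ValueError("H and W must be divisible by the patch size")
    # (H, W, C) -> (H/P, P, W/P, P, C): separate the patch grid from in-patch pixels
    grid = image.reshape(h // p, p, w // p, p, c)
    # Bring the two grid axes together: (H/P, W/P, P, P, C)
    grid = grid.transpose(0, 2, 1, 3, 4)
    # Flatten each patch (row-major over the grid) into a vector of length P^2 * C
    return grid.reshape(-1, p * p * c)

# Usage: a 224x224 RGB image with P = 16 gives N = 196 patches of length 768
img = np.random.rand(224, 224, 3)
patches = image_to_patches(img, 16)
print(patches.shape)  # (196, 768)
```

Patches are ordered row by row across the image grid, so `patches[0]` is the top-left $P \times P$ block and `patches[1]` is the block immediately to its right.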

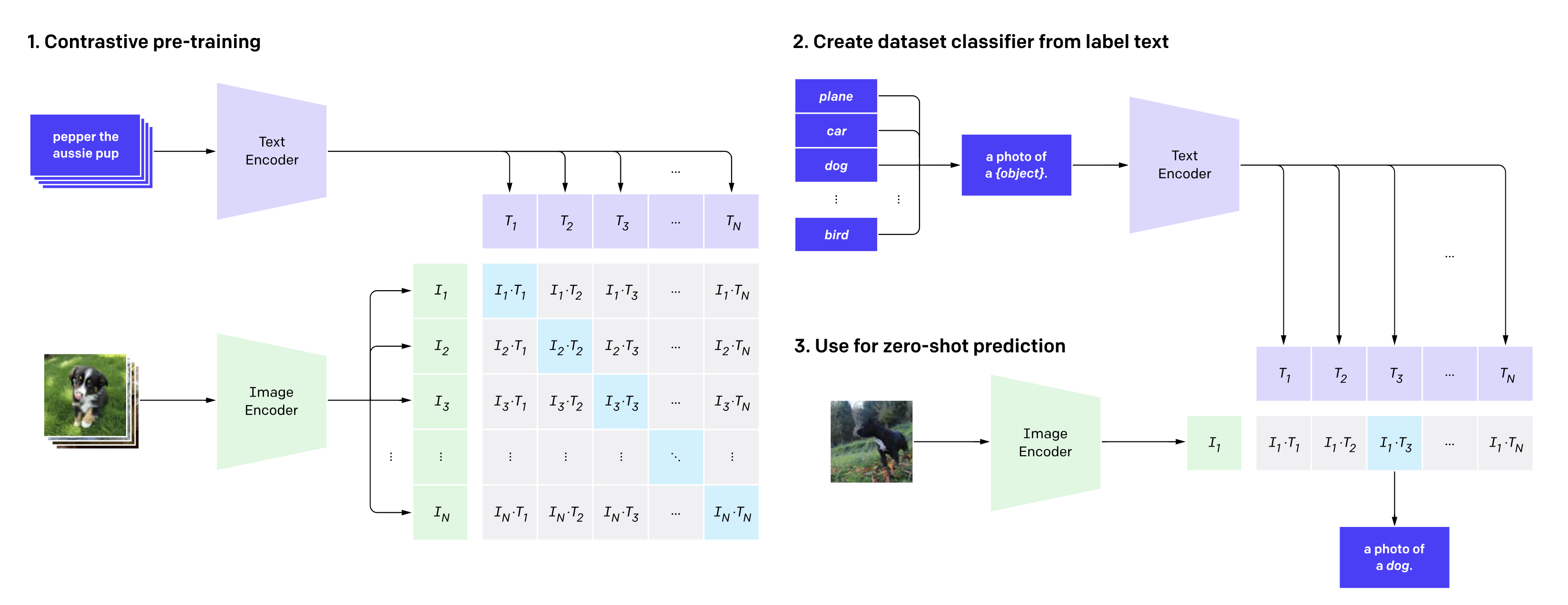

The Annotated CLIP

Launched in early 2021, CLIP (Contrastive Language–Image Pre-training) has sparked numerous creative projects and inspired a wide range of innovations. Exploring the original paper and its code is highly recommended. This article provides an annotated version of the CLIP paper, examining implementation details using the o...

Human-in-the-Loop Machine Learning and Practical Advice

Play the best part as a human in the machine learning life cycle

In March, I had the opportunity to give a talk about real-world machine learning practice for AISG. This post summarizes that talk. The topic I chose, as stated in the title, is mainly about how "Human" is involved in the machine learning life cycle. It's greatly inspir...