Table of Contents

- Who are the humans in the loop

- Machine learning problem framing

- Important decisions before development

- Annotation design and refinement

- Hidden Facts about annotation

- Is data the new oil?

- Feature Engineering vs. Data “Engineering”

- Data cleaning in practice

- Active Learning

- Monitoring Models in Production

Play the best part as a human in the machine learning life cycle

In March, I had the opportunity to give a talk about real-world machine learning practice for AISG. This post summarizes the talk.

The topic I chose, as stated in the title, is mainly about how “Human” is involved in the machine learning life cycle. It’s heavily inspired by the book of the same name, Human-in-the-Loop Machine Learning, written by Robert (Munro) Monarch.

I feel lucky to have finished the preview version even before its official publication (expected in May 2021). It’s definitely one of the most insightful machine learning books I’ve read recently, and I highly recommend it to anyone interested in real-world machine learning practice.

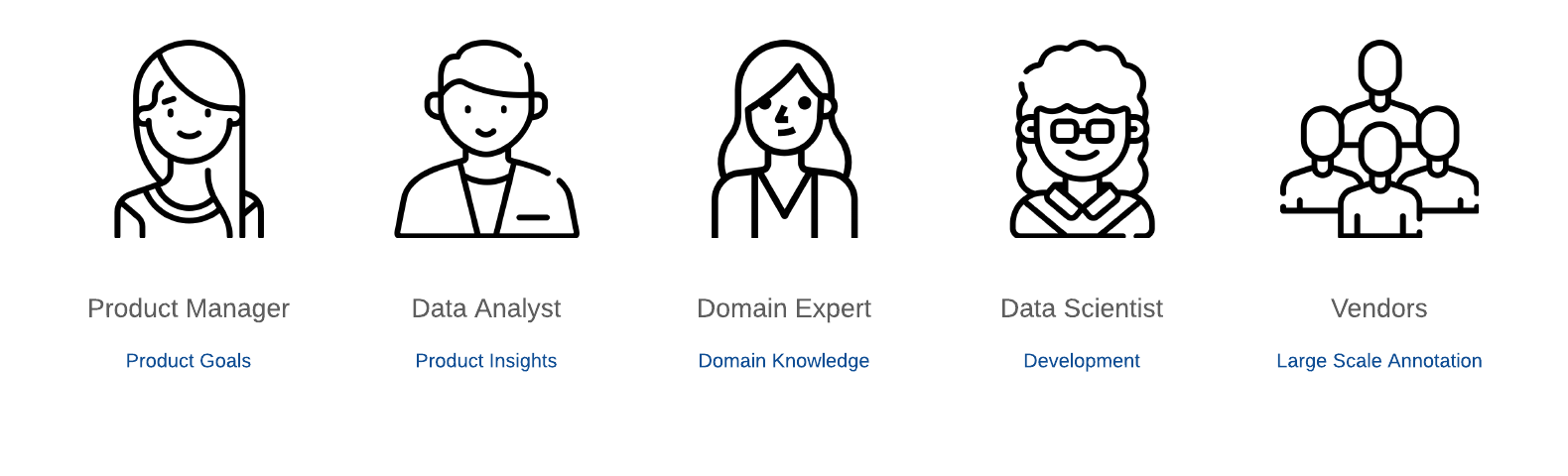

Who are the humans in the loop

In practice, a successful machine learning project team requires a diverse combination of people and skills. Data scientists are just a small part of it. Ideally, there are at least five different roles.

Product managers communicate with customers, collect requirements, and transform useful information into product requirements documents (PRDs). The PRD is the starting point of any ML project.

Data analysts draw insights from data to help the team make better decisions. For instance, competitor analysis and market research provide the team with clear benchmarks and the main areas of focus.

Domain experts inject professional knowledge into ML products by transforming unstructured, implicit domain knowledge into structured, explicit materials (taxonomies, annotation guidelines, etc.) for ML product development.

Data scientists are responsible for end-to-end model development, including data preparation, model training, deployment, model monitoring, etc.

Last but not least, given that deep learning algorithms are data-hungry, vendors (outsourced workers) provide large-scale annotation services for training data construction.

Machine learning problem framing

Before diving into ML solution development, it’s worth spending time carefully framing the problem so that it can be solved with ML techniques.

I highly recommend this course created by Google: Introduction to Machine Learning Problem Framing. It provides comprehensive instructions on ML problem framing, including: (quoted from the course objectives)

- Define common ML terms.

- Describe examples of products that use ML and general methods of ML problem-solving used in each.

- Identify whether to solve a problem with ML.

- Compare and contrast ML to other programming methods.

- Apply hypothesis testing and the scientific method to ML problems.

- Have conversations about ML problem-solving methods.

Important decisions before development

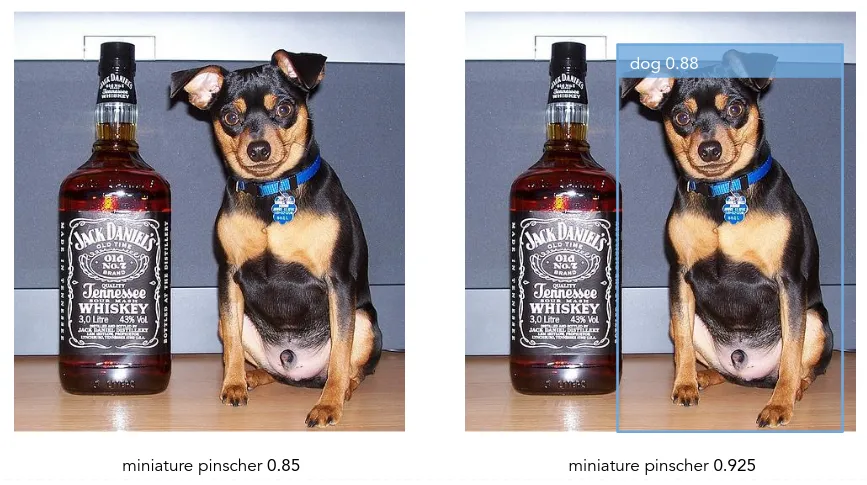

After framing a proper ML problem, there are still a few crucial decisions to make before entering the development stage. We use dog breed identification as an example to showcase the decision-making process.

What is the scope?

Almost all ML models are built under closed-world settings. From the perspective of a model, its “scope” determines its “world”, or its expected capability. Defining a proper scope is the most important decision that affects subsequent choices about data collection strategies, model complexity, evaluation methodologies, etc. It also influences stakeholders’ understanding and expectations. All related parties should participate in the discussion and provide different perspectives.

Potential questions to answer during the discussion:

- What is the target use case? Dog breed identification.

- How many dog breeds in total? 120.

- What are the dog breeds? List of 120 dog breeds.

- Multi-class or multi-label? Multi-class: one dog can belong to only one breed.

- What are the expected image types? Photos. (other possible image types could be sketch, painting, quickdraw, etc. Different image types usually imply different difficulty levels.)

- Is detection included? No. The model won’t be able to properly handle images with multiple dog breeds.

- What are the expected successful scenarios? If there is only one single dog breed (it doesn’t matter if there are multiple dogs) in the input image, the model should be able to recognize the dog breed with high accuracy.

- What would be the possible failure scenarios? Considering there is no object bounding box provided, if there are multiple dog breeds in the input image, the model might perform poorly. Understanding the limitations of a model is as important as understanding its capabilities. Data scientists will be more capable of debugging performance issues if they’ve already thought through all possibilities beforehand. Product managers will surely benefit as they always need to provide valid explanations to clients when things go wrong. Besides, pointing out failure scenarios could inspire the team with new ideas. In this example, the team might think about including a dog detection solution in the plan if they want to handle images with multiple dog breeds.

What does the input look like?

Photos of a single dog or of multiple dogs of the same breed.

What does the output look like?

Left: the output if there is no detection. Right: the output if detection is included.

One possible extension is to output the model’s confidence level, or to require that the prediction score represent the confidence level (that is, that the scores be calibrated).
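Requiring the prediction score to represent the confidence level is usually called calibration. One common way to check it (not something this post prescribes) is the expected calibration error (ECE): group predictions into bins by their score and compare each bin’s average score with its actual accuracy. The sketch below assumes that, for every evaluation image, you already have the top prediction score and whether that prediction was correct; the 10 equal-width bins are an arbitrary choice.

```python
from typing import List, Tuple


def expected_calibration_error(
    confidences: List[float], correct: List[bool], n_bins: int = 10
) -> float:
    """ECE: size-weighted average of |accuracy - mean confidence| over bins."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need equally sized, non-empty inputs")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins over [0, 1]; a score of exactly 1.0 goes into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / total * abs(accuracy - mean_conf)
    return ece


# Ten predictions at 0.8 confidence, eight of them correct: well calibrated.
print(expected_calibration_error([0.8] * 10, [True] * 8 + [False] * 2))
# Four predictions at 1.0 confidence, only two correct: overconfident.
print(expected_calibration_error([1.0] * 4, [True, True, False, False]))
```

A low ECE on a representative evaluation set is one signal that the scores can reasonably be shown to users as confidence levels.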

How to evaluate the performance?

- How to construct a representative evaluation set? For example, the evaluation set should contain at least 10 images per dog breed, cover both indoor and outdoor environments, and span a wide range of reasonable lighting conditions.

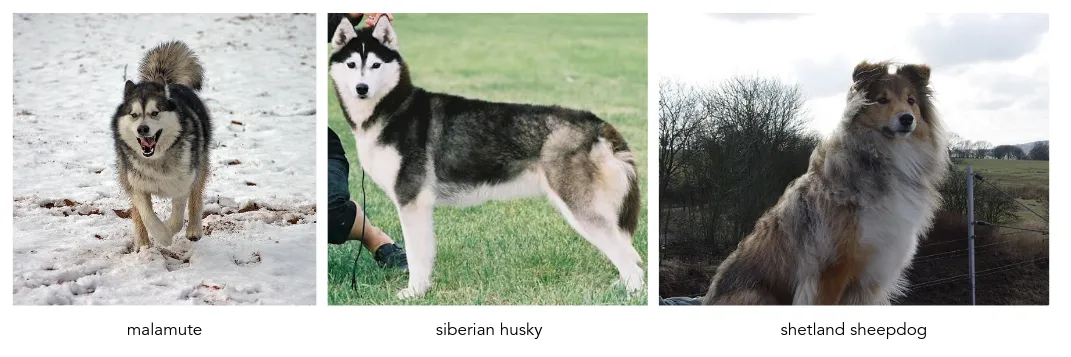

- Are all types of errors equally severe? For instance, is predicting Malamute as Siberian husky as bad as predicting Malamute as Shetland sheepdog? The more severe issues should be prioritized.

- What metrics to use, and what are the targets? For this example, we require the F1 score of each dog breed to be at least 0.8, and the overall F1 score, weighted by breed importance, to be at least 0.85.
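The evaluation criteria above can be checked mechanically. The sketch below does it in plain Python, assuming breed labels are strings and that breed importance is supplied as a hypothetical `importance` mapping of positive weights; the default thresholds mirror the example (at least 10 images per breed, per-breed F1 of at least 0.8, importance-weighted F1 of at least 0.85).

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EvalReport:
    under_covered: List[str]  # breeds with too few evaluation images
    below_target: List[str]   # breeds whose F1 is under the per-breed target
    per_breed_f1: Dict[str, float]
    weighted_f1: float
    passed: bool


def per_breed_f1(y_true: List[str], y_pred: List[str], breeds: List[str]) -> Dict[str, float]:
    scores = {}
    for breed in breeds:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == breed and p == breed)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != breed and p == breed)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == breed and p != breed)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN), defined as 0 when the breed never appears.
        scores[breed] = 2 * tp / denom if denom else 0.0
    return scores


def check_targets(
    y_true: List[str],
    y_pred: List[str],
    importance: Dict[str, float],  # hypothetical per-breed importance weights
    min_images: int = 10,
    min_breed_f1: float = 0.8,
    min_weighted_f1: float = 0.85,
) -> EvalReport:
    breeds = sorted(importance)
    counts = Counter(y_true)
    under_covered = [b for b in breeds if counts[b] < min_images]
    f1 = per_breed_f1(y_true, y_pred, breeds)
    below_target = [b for b in breeds if f1[b] < min_breed_f1]
    weighted = sum(importance[b] * f1[b] for b in breeds) / sum(importance.values())
    passed = not under_covered and not below_target and weighted >= min_weighted_f1
    return EvalReport(under_covered, below_target, f1, weighted, passed)


y_true = ["malamute"] * 10 + ["husky"] * 10
y_pred = ["malamute"] * 10 + ["husky"] * 9 + ["malamute"]
report = check_targets(y_true, y_pred, importance={"malamute": 2.0, "husky": 1.0})
print(report.passed, round(report.weighted_f1, 3))
```

Reporting the under-covered and below-target breeds by name, rather than a single pass/fail flag, tells the team where to collect more data next.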

Annotation design and refinement

Annotation puts the “Human” in Human-in-the-Loop Machine Learning. Creating datasets with accurate and representative labels for machine learning is often the most underestimated component of a machine learning application.

If you’ve only experienced machine learning projects in school, you might not even know how data annotation works. Usually, the datasets, and even the splits (train/validation/test), are prepared in advance, especially when you are using open datasets such as ImageNet.

Luckily, some research papers carefully describe their data annotation process. Take the ImageNet paper as an example:

To collect a highly accurate dataset, we rely on humans to verify each candidate image collected in the previous step for a given synset. This is achieved by using the service of Amazon Mechanical Turk (AMT), an online platform on which one can put up tasks for users to complete and to get paid.

Similar to the ImageNet project, many academic projects chose crowdsourced workers as their annotation workforce, mainly because crowdsourcing allows flexible scaling up and down. Although crowdsourced workers are the most talked-about workforce, they are actually the least used workforce in industry.

While academic research projects mostly focus on quick experiments for different use cases, AI companies aim for “sustained increases in accuracy for one use case” (quoted from the book). From their perspective, it’s more efficient to hire dedicated annotators to produce high-quality data annotation consistently.

In most companies, it is rare for any large, sustained Machine Learning project to rely on crowdsourced workers.

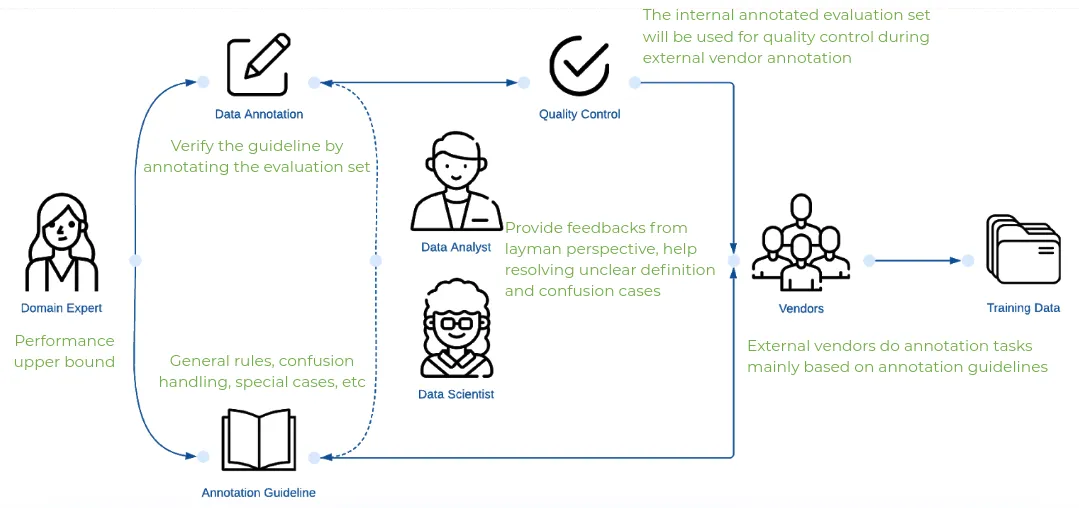

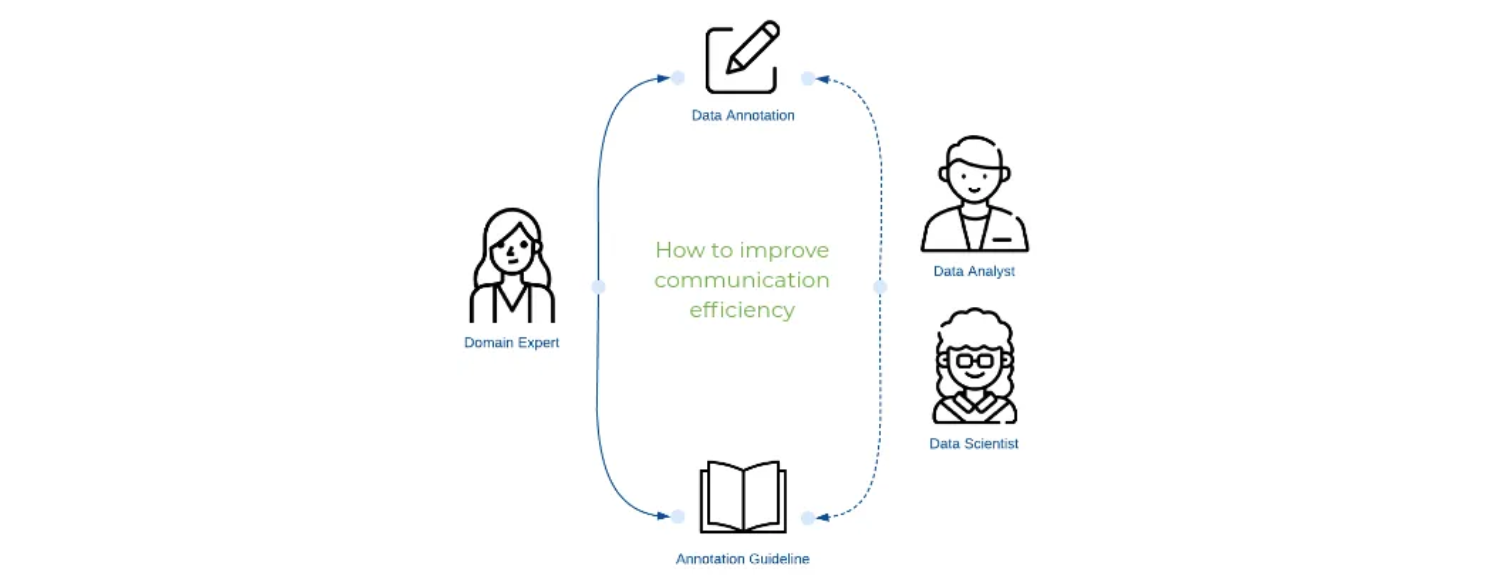

What’s more, real-world data annotation is never a one-time effort. It forms a small loop within itself.

In practice, AI companies mainly rely on three types of annotation workforces.

In-house domain experts

Domain experts are professionals with the required knowledge of a specific domain. Healthcare AI companies might hire people with medical backgrounds as their in-house experts. Fashion AI companies might look for fashion designers, while companies focusing on furniture might employ furniture designers.

Domain experts represent human-level performance on any domain-specific task that we want ML models to perform. Human-level performance is often also treated as an upper bound on ML model performance. Besides setting the standards for correct data labeling, their contributions to data annotation include, but are not limited to:

- Define the meaning of each label.

- Clarify the boundaries between different labels.

- Design annotation guidelines for layman annotators.

- Obtain feedback from layman annotators and refine the annotation standards.

Project team members

No matter what combination of workforces you use, I recommend running annotation sessions among the greatest possible diversity of internal staff members.

Similar to the tip above, we always suggest conducting small-scale internal annotation sessions before large-scale external annotation. In addition to domain experts, data scientists (sometimes data analysts, or even product managers) are always involved in the internal annotation sessions. In fact, we prefer treating it as a strict requirement rather than a recommended practice because the benefits are obvious.

- To train and diagnose ML models efficiently, data scientists need to equip themselves with prerequisite knowledge for the target tasks.

- By trying out initial annotation tasks on a small scale, data scientists greatly help troubleshoot and provide valuable feedback from the perspective of layman annotators.

Ideally, most of the issues are discovered and resolved after several rounds of internal annotation, so outsourced vendors receive high-quality annotation instructions by the time they start working on the annotation tasks.

Outsourced vendors

Outsourced vendors are the major workforce for large-scale data annotation. At the stage of large-scale external annotation, it’s assumed that annotation instructions are well-defined and the annotation tasks have been simplified as much as possible. Therefore, it’s reasonable to believe that vendors are able to produce high-quality annotations although they don’t have comprehensive knowledge of the tasks, simply because it’s not required.

However, vendors’ annotation quality is still considered lower than that of internal layman annotators, mainly because vendors are usually required to annotate a large amount of data under strict time constraints. The quality could be even worse without proper quality control (annotators might simply label at random). In practice, in-house annotations are often used for quality control.
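A common way to use in-house annotations for quality control is to mix items that already have trusted in-house labels (often called gold or honeypot items) into vendor batches, then measure each vendor annotator’s accuracy on just those items. The sketch below assumes vendor output arrives as `(annotator_id, item_id, label)` tuples; the 0.9 accuracy threshold is an arbitrary illustration, not a recommendation from this post.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def gold_accuracy(
    vendor_labels: List[Tuple[str, str, str]],  # (annotator_id, item_id, label)
    gold: Dict[str, str],                        # item_id -> trusted in-house label
) -> Dict[str, float]:
    """Per-annotator accuracy, computed only on items that have a gold label."""
    checked: Dict[str, int] = defaultdict(int)
    agreed: Dict[str, int] = defaultdict(int)
    for annotator, item, label in vendor_labels:
        if item not in gold:
            continue  # ordinary item: nothing to compare against
        checked[annotator] += 1
        agreed[annotator] += int(label == gold[item])
    return {a: agreed[a] / checked[a] for a in checked}


def flag_annotators(accuracy: Dict[str, float], threshold: float = 0.9) -> List[str]:
    """Annotators whose gold accuracy falls below the (assumed) threshold."""
    return sorted(a for a, acc in accuracy.items() if acc < threshold)


labels = [
    ("vendor_1", "img_1", "akita"), ("vendor_1", "img_2", "shiba"),
    ("vendor_2", "img_1", "shiba"), ("vendor_2", "img_2", "shiba"),
    ("vendor_2", "img_3", "akita"),
]
gold = {"img_1": "akita", "img_2": "shiba"}
acc = gold_accuracy(labels, gold)
print(acc, flag_annotators(acc))
```

Work from flagged annotators can then be re-reviewed in-house or sent back for re-annotation.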

Hidden Facts about annotation

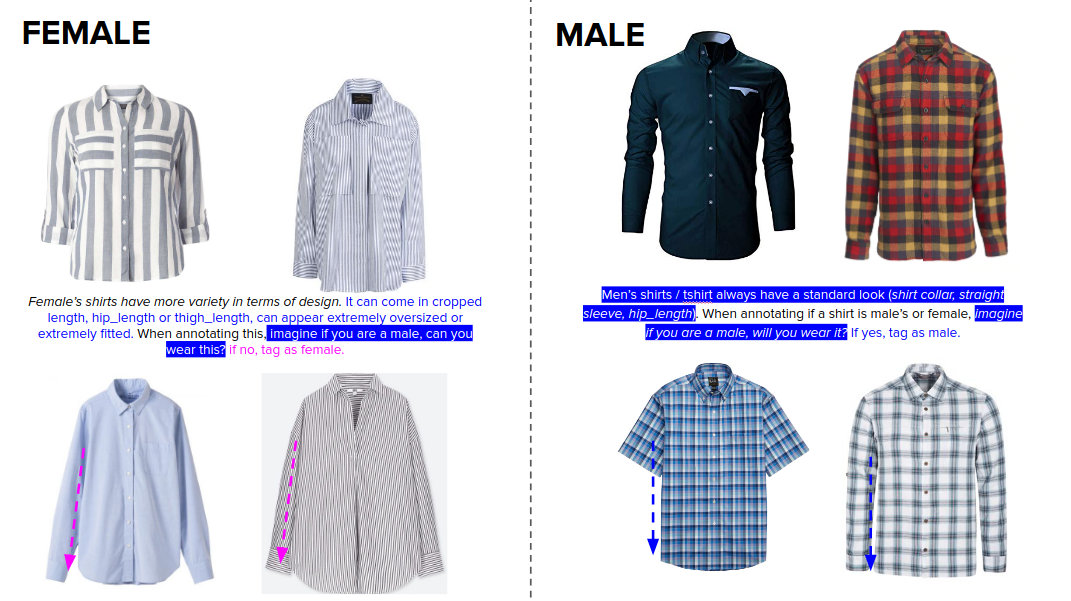

Fact 1: Good annotation guidelines take great effort

Especially when the task is domain-specific.

It’s not surprising that we are sometimes able to perform certain tasks but can’t explain the logic behind them. Similarly, domain experts often struggle to translate domain-specific terms into language that laymen can understand. Imagine how you might write instructions for distinguishing between a Shiba and an Akita.

Below is an example page of our gender recognition annotation guideline. Even describing the difference between female and male products is not as easy as we might think.

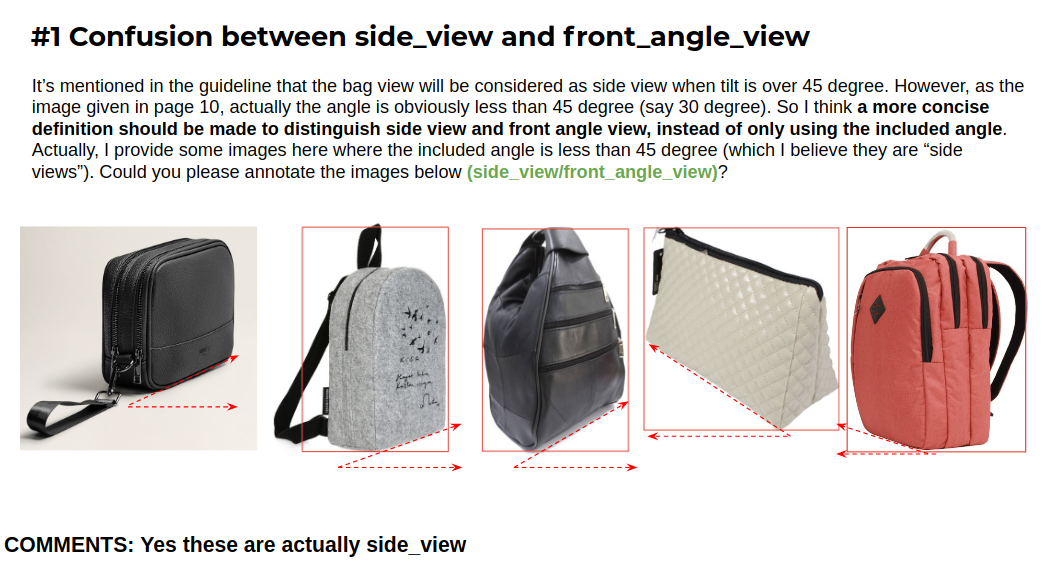

Fact 2: Communication could be a bottleneck

Internal annotation sessions require back-and-forth communication between domain experts and internal annotators. Without carefully designed protocols, communication soon becomes a bottleneck, especially when domain experts are in charge of multiple projects in parallel.

Below is an example showing how an internal annotator points out a confusing part of the guideline, along with the domain expert’s comments.

Is data the new oil?

In every industry I have seen, the state-of-the-art model that won long term was the winner because of better training data and not because of new algorithms. This is why having better data is often referred to as a “data moat”: the data is the barrier that prevents your competitors from reaching the same levels of accuracy.

The simple answer is yes.

It might disappoint many people who want to pursue a career in machine learning, but data scientists actually spend most of their time looking at data, discovering and fixing data issues. I believe that’s why we are called data scientists, not software engineers, algorithm engineers, or research scientists. If you are not fond of working with data, being a data scientist is probably not the right choice for you.

We also shouldn’t take this for granted. Data scientists can focus on data only thanks to the great efforts of selfless pioneers and contributors. We don’t need to reinvent the wheel because we can easily get started with scikit-learn, PyTorch, TensorFlow, Keras, etc. We benefit greatly from pre-trained models released by academic research groups and big companies, so even individuals can build powerful ML models nowadays. It has also become increasingly convenient to access state-of-the-art research results thanks to arXiv, GitHub, etc.

Although dealing with data issues might not sound fancy, it generally contributes the most to performance improvement in practice. In real-world use cases, simply improving data coverage and annotation quality can boost model performance by more than 10%, while tuning hyperparameters won’t help nearly as much unless you switch to a larger model or a larger input size. Of course, this assumes that you understand commonly known best practices, such as transfer learning and learning rate decay, and have already applied them to model training.

Another exception is when you are experienced and lucky enough to discover a “golden” hyperparameter setting: you fully understand the specifics of the task at hand, so you have clear ideas about which parameters to adjust. But overall, such cases are rare. Instead, I keep seeing people tune hyperparameters without understanding the logic behind them.

You might not see many research papers about fixing data issues or improving data quality, since research projects usually can’t change the data if they want to be fairly compared with each other. In practice, we are free to modify the data as long as it improves model performance. Also, some methods are simply too straightforward to warrant a research paper, but that doesn’t mean they are not effective.

Garbage in, garbage out (GIGO)

High-quality inputs are responsible for high-quality outputs. The training data is the ultimate learning material for any model trained by supervised learning. There is no reason to deny the importance of data.

Feature Engineering vs. Data “Engineering”

As mentioned in the previous section, data scientists still spend most of their time on data preparation nowadays, whether they work with traditional machine learning or deep learning algorithms.

Traditional machine learning: Feature Engineering

Before my current job, I worked as a data scientist in a big insurance company. At that time, I worked with structured data and built traditional machine learning models to predict user behaviors, including customer purchase prediction, churn prediction, fault detection, etc.

Since we were predicting human behaviors, labels could be obtained naturally from facts (for example, whether a customer actually made a purchase), so no human annotation was required. The amount of usable data was usually limited because only a finite set of features was closely related to the prediction target. Even when there could be more potentially useful features, manual feature engineering was inefficient and soon became a bottleneck.

As data scientists, we were fully aware of the power of good features. We put tremendous effort into feature engineering in spite of the tedium. To create meaningful features, we frequently communicated with business teams, looking for inspiration in related business indicators and the logic behind them. Our boss also proactively talked with various parties to see whether we could acquire new data sources to improve the overall predictive power.

At that time, the most commonly used machine learning algorithms were logistic regression, decision trees, random forests, XGBoost, etc. None of these algorithms can extract features from raw data on their own, so their performance depends heavily on how accurately the features are identified and represented. That’s the main reason why feature engineering is vital for traditional ML algorithms.
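To make manual feature engineering concrete: a model like logistic regression or XGBoost cannot consume a raw transaction log directly, so someone has to decide how to summarize it into one row per customer. The sketch below computes recency, frequency, and spend features, a widely used hand-crafted feature set for purchase or churn prediction. The `Transaction` record and the specific features are illustrative assumptions, not the features used in the projects described here.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class Transaction:  # hypothetical raw record
    customer_id: str
    day: date
    amount: float


def customer_features(transactions: List[Transaction], as_of: date) -> Dict[str, Dict[str, float]]:
    """Aggregate raw transactions into one hand-crafted feature row per customer."""
    by_customer: Dict[str, List[Transaction]] = {}
    for t in transactions:
        by_customer.setdefault(t.customer_id, []).append(t)
    rows = {}
    for cid, txs in by_customer.items():
        total = sum(t.amount for t in txs)
        rows[cid] = {
            "recency_days": float((as_of - max(t.day for t in txs)).days),
            "frequency": float(len(txs)),
            "total_spend": total,
            "avg_spend": total / len(txs),
        }
    return rows


log = [
    Transaction("c1", date(2021, 1, 1), 100.0),
    Transaction("c1", date(2021, 3, 1), 50.0),
    Transaction("c2", date(2021, 2, 15), 20.0),
]
features = customer_features(log, as_of=date(2021, 3, 31))
print(features["c1"])
```

Every new idea from the business side (say, spend in the last 30 days) means another hand-written aggregation like this, which is why the process becomes a bottleneck.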

Deep learning: Data “Engineering”

Recently, more and more data scientists, including me, have switched from traditional ML to deep learning. This is not only because deep learning is considered more powerful and on-trend, but also, quite likely, because people want to get rid of tedious feature engineering, knowing that deep learning algorithms can learn features directly from data.

However, there is no free lunch. From what I’ve observed, data scientists still spend most of their time on data preparation when working with deep learning algorithms. The only difference is that, instead of working on feature engineering, they work on data “engineering”. I use quotation marks here because the term data “engineering” is only meant to mirror the term feature engineering; it is not the same as the commonly known discipline of data engineering. In other words, although deep learning algorithms can learn features from data, they can only learn good features if the data contains good and meaningful information. Therefore, data scientists simply shift their effort from manually creating good features to producing high-quality data, aka engineering the data. Here are some possible “engineering” points:

- Data collection: how to collect a large amount of representative data

- Data annotation: how to design efficient and accurate annotation tasks

- Data cleaning: how to discover and correct the wrong annotations

- Data enrichment: how to include the most valuable data points

Data cleaning in practice

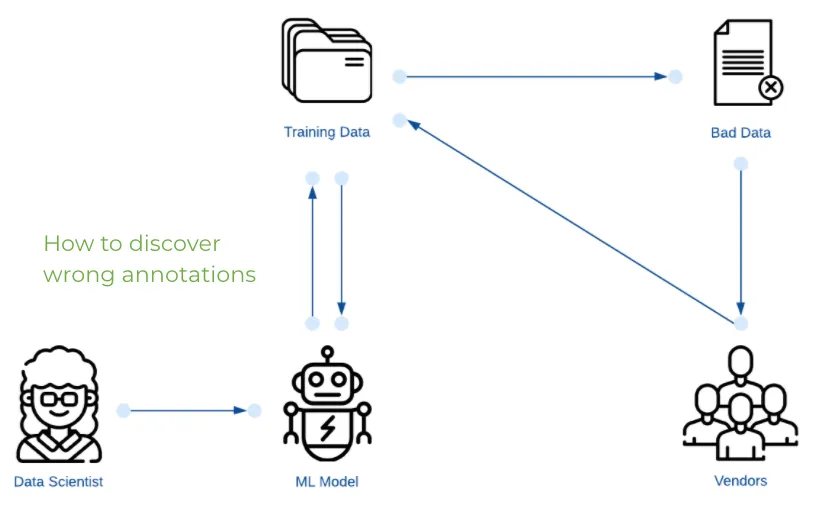

Annotation errors are unavoidable. The goal of data cleaning is to improve the accuracy of existing annotations by discovering and correcting wrong annotations. Here I introduce three simple, practical, and effective methods.

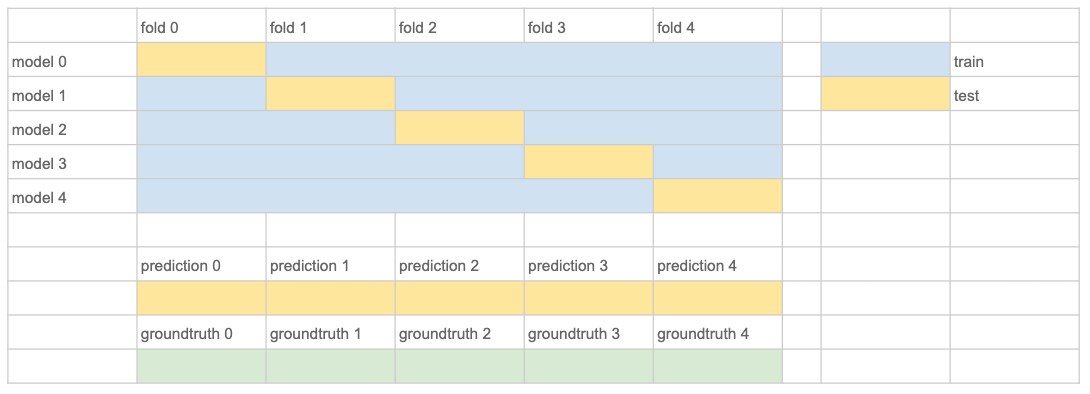

Contradiction based discovery: KFold Cleaning

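This method is generally applicable if there are more correct annotations than wrong annotations in the training set. The main idea is to retrieve wrong annotations by majority vote: if a model trained on the mostly correct data confidently disagrees with a label, that label is suspicious. To obtain unbiased predictions, we need k-fold cross-validation, so that every data point is predicted by a model that never saw it during training.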

- Get unbiased predictions for the whole training set through k-fold cross-validation. The recommended k here is 3 or 5 (rather than 10), since training a deep learning model usually takes a long time.

- Compare the unbiased predictions with the annotated ground truths.

- Select data points whose predictions differ from the ground truths, and rank them by prediction score in descending order.

- Select top X data points and create annotation tasks for human correction.
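The KFold Cleaning steps can be sketched in a few dozen lines. To keep the example self-contained, a toy nearest-centroid classifier (a hypothetical stand-in, not part of the method) plays the role of the deep learning model; in practice you would plug in your real training and inference code. The names `kfold_clean`, `k`, and `top_x` are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Sequence[float]


def fit_centroids(xs: List[Point], ys: List[str]) -> Dict[str, List[float]]:
    """Toy stand-in for model training: the mean feature vector of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(xs, ys):
        acc = sums.setdefault(y, [0.0] * len(x))
        sums[y] = [s + v for s, v in zip(acc, x)]
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_proba(centroids: Dict[str, List[float]], x: Point) -> Dict[str, float]:
    """Softmax over negative Euclidean distances to each centroid."""
    logits = {y: -math.dist(x, c) for y, c in centroids.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {y: math.exp(v - top) for y, v in logits.items()}
    z = sum(exps.values())
    return {y: e / z for y, e in exps.items()}


def kfold_clean(
    xs: List[Point], ys: List[str], k: int = 3, top_x: int = 10
) -> List[Tuple[int, str, str, float]]:
    """Return (index, given_label, predicted_label, score) for suspicious items."""
    suspects = []
    for fold in range(k):
        # Interleaved folds for brevity; real code would shuffle (and stratify).
        held_out = [i for i in range(len(xs)) if i % k == fold]
        train = [i for i in range(len(xs)) if i % k != fold]
        centroids = fit_centroids([xs[i] for i in train], [ys[i] for i in train])
        for i in held_out:  # predicted by a model that never saw item i
            probs = predict_proba(centroids, xs[i])
            pred = max(probs, key=probs.get)
            if pred != ys[i]:
                suspects.append((i, ys[i], pred, probs[pred]))
    suspects.sort(key=lambda s: s[3], reverse=True)  # most confident disagreement first
    return suspects[:top_x]


xs = [(0, 0), (10, 10), (0, 1), (10, 11), (1, 0), (11, 10), (1, 1), (11, 11), (0.5, 0.5)]
ys = ["a", "b", "a", "b", "a", "b", "a", "b", "b"]  # the last label is wrong
print(kfold_clean(xs, ys, k=3, top_x=5))
```

Only the returned items go to annotators, so the human effort scales with `top_x` rather than with the size of the training set.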

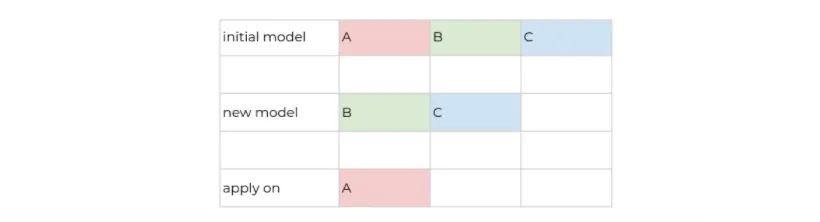

Confusion based discovery: Precision Cleaning

This method can be used if a few labels have relatively low precision. Imagine a multi-class task with three labels, A, B, and C, where A has a very low precision score. A has low precision because many NOT-A samples (B, C) are predicted as A. It’s reasonable to deduce that there might be many B and C samples in A’s training set (wrongly annotated as A).

- Train a model only on B and C, apply it to A’s training data, and rank the results by prediction score in descending order.

- Select the top X data points and create annotation tasks for human verification. Since the model can only predict B or C for A’s data, annotators can directly verify whether each prediction is correct.
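Precision Cleaning follows the same pattern. In the sketch below the same kind of toy nearest-centroid classifier stands in for a real model (a hypothetical substitute, repeated here so the block runs on its own), and `precision_clean` and its parameters are illustrative names. Because the model never sees label A, every A item is forced into B or C, and the most confident of those answers are the likeliest annotation errors.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Sequence[float]


def fit_centroids(xs: List[Point], ys: List[str]) -> Dict[str, List[float]]:
    """Toy stand-in for model training: the mean feature vector of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(xs, ys):
        acc = sums.setdefault(y, [0.0] * len(x))
        sums[y] = [s + v for s, v in zip(acc, x)]
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_proba(centroids: Dict[str, List[float]], x: Point) -> Dict[str, float]:
    """Softmax over negative Euclidean distances to each centroid."""
    logits = {y: -math.dist(x, c) for y, c in centroids.items()}
    top = max(logits.values())
    exps = {y: math.exp(v - top) for y, v in logits.items()}
    z = sum(exps.values())
    return {y: e / z for y, e in exps.items()}


def precision_clean(
    xs: List[Point], ys: List[str], low_precision_label: str, top_x: int = 10
) -> List[Tuple[int, str, float]]:
    """Return (index, predicted_label, score) for items labeled low_precision_label."""
    # Step 1: train only on the other labels (B and C in the example).
    others = [i for i, y in enumerate(ys) if y != low_precision_label]
    centroids = fit_centroids([xs[i] for i in others], [ys[i] for i in others])
    # Step 2: apply the model to every item currently labeled A.
    scored = []
    for i, y in enumerate(ys):
        if y == low_precision_label:
            probs = predict_proba(centroids, xs[i])
            pred = max(probs, key=probs.get)
            scored.append((i, pred, probs[pred]))
    # Step 3: highest scores first; these go to human verification.
    scored.sort(key=lambda s: s[2], reverse=True)
    return scored[:top_x]


xs = [(10, 0), (11, 0), (10, 1), (0, 10), (0, 11), (1, 10), (0, 0), (1, 0), (0, 1), (10, 0.5)]
ys = ["B", "B", "B", "C", "C", "C", "A", "A", "A", "A"]  # item 9 is really a B
print(precision_clean(xs, ys, "A", top_x=2))
```

A genuine A item can still receive a fairly high score, which is exactly why the ranked list goes to humans instead of being relabeled automatically.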

Uncertainty based discovery: Entropy Cleaning

This method is generally applicable if the current model has achieved stable performance. The model is considered stable when its performance doesn’t fluctuate significantly under small changes in parameters or starting conditions. The main idea is to retrieve uncertain data points that are close to the decision boundaries.

- Get unbiased prediction scores of the whole training set through k-fold cross-validation, same as KFold Cleaning.

- For each data point, calculate the entropy of its unbiased prediction scores, then rank the training data points by entropy in descending order.

- Select top X data points with high entropy values for human review because they are close to the decision boundaries.
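For a predicted distribution $p$ over $C$ labels, the entropy is $H(p) = -\sum_{c=1}^{C} p_c \log p_c$. It is 0 when the model puts all its probability on one label and reaches its maximum, $\log C$, when the model is evenly split. The sketch below assumes the out-of-fold probabilities have already been computed as in KFold Cleaning and are stored as hypothetical label-to-probability dicts.

```python
import math
from typing import Dict, List, Tuple


def entropy(probs: Dict[str, float]) -> float:
    """Shannon entropy in nats; terms with p == 0 contribute 0."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0)


def entropy_clean(oof_probs: List[Dict[str, float]], top_x: int = 10) -> List[Tuple[int, float]]:
    """Rank training items by the entropy of their out-of-fold predictions."""
    scored = [(i, entropy(p)) for i, p in enumerate(oof_probs)]
    scored.sort(key=lambda s: s[1], reverse=True)  # most uncertain first
    return scored[:top_x]


oof_probs = [
    {"akita": 0.98, "shiba": 0.01, "husky": 0.01},  # confident
    {"akita": 0.34, "shiba": 0.33, "husky": 0.33},  # near the decision boundary
    {"akita": 0.60, "shiba": 0.40, "husky": 0.00},  # torn between two breeds
]
print(entropy_clean(oof_probs, top_x=2))
```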

Active Learning

Besides cleaning up the existing annotations, it almost always helps to obtain more training data. Personally, I consider active learning to be a set of strategies for selecting valuable data points for data enrichment.

I got most of my knowledge of active learning from Human-in-the-Loop Machine Learning, so I’ll simply list my recommendations.

First, I recommend reading the article Active Transfer Learning with PyTorch by the book’s author, Robert (Munro) Monarch, especially the “Before getting started” section, where he kindly summarizes all of his Medium articles about active learning. For people who want to understand active learning and annotation in detail, I suggest reading the book.
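For a concrete flavor of “selecting valuable data points”, here is a minimal sketch of margin-of-confidence sampling, one common uncertainty sampling strategy: send annotators the unlabeled items whose top two predicted labels are closest. The pool format and the `budget` parameter are assumptions for illustration; the resources above cover active learning strategies in much more depth.

```python
from typing import Dict, List, Tuple


def margin_uncertainty(probs: Dict[str, float]) -> float:
    """1 - (top score - runner-up score): near 1 when the top two labels are nearly tied."""
    ranked = sorted(probs.values(), reverse=True)
    if len(ranked) < 2:
        return 0.0  # a single possible label leaves no margin to measure
    return 1.0 - (ranked[0] - ranked[1])


def select_for_annotation(pool: List[Tuple[str, Dict[str, float]]], budget: int) -> List[str]:
    """Pick the `budget` unlabeled items the current model is least sure about."""
    ranked = sorted(pool, key=lambda item: margin_uncertainty(item[1]), reverse=True)
    return [item_id for item_id, _ in ranked[:budget]]


pool = [
    ("img_1", {"malamute": 0.90, "husky": 0.10}),
    ("img_2", {"malamute": 0.51, "husky": 0.49}),
    ("img_3", {"malamute": 0.70, "husky": 0.30}),
]
print(select_for_annotation(pool, budget=2))
```

Pure uncertainty sampling tends to pick many near-duplicate items from the same confusing region, so in practice it is often combined with other selection strategies.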

Monitoring Models in Production

I recommend this article from Domino: Maintaining Data Science at Scale. Below is the abstract:

This article covers model drift, how to identify models that are degrading, and best practices for monitoring models in production. For additional insights and best practices beyond what is provided in this article, including steps for correcting model drift, download the “Model Monitoring Best Practices: Maintaining Data Science at Scale” whitepaper.
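As one simple, commonly used drift signal (not necessarily the approach the Domino article recommends), you can compare the distribution of predicted labels in a recent production window against a reference window, for example with the population stability index (PSI). The sketch assumes predicted labels are logged as strings. A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but any alert threshold should be tuned for your own use case.

```python
import math
from collections import Counter
from typing import List, Sequence


def label_distribution(labels: Sequence[str], categories: List[str], eps: float = 1e-6) -> List[float]:
    """Share of each category; eps keeps empty categories away from log(0)."""
    if not labels:
        raise ValueError("need at least one label")
    counts = Counter(labels)
    return [max(counts[c] / len(labels), eps) for c in categories]


def population_stability_index(
    reference: Sequence[str], production: Sequence[str], categories: List[str]
) -> float:
    """PSI = sum over categories of (prod - ref) * ln(prod / ref)."""
    ref = label_distribution(reference, categories)
    prod = label_distribution(production, categories)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


reference = ["malamute"] * 50 + ["husky"] * 50  # predictions on validation data
this_week = ["malamute"] * 90 + ["husky"] * 10  # predictions in production
psi = population_stability_index(reference, this_week, ["malamute", "husky"])
print(round(psi, 3))
```

A shift in predicted labels does not say whether the inputs or the model changed, so a PSI alert is a prompt to sample and review recent production data, not a verdict.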